
Something to look forward to: Many consumers are anticipating this year's new graphics cards for their gaming capabilities, but the cards also bring new tools for video encoding. Nvidia's RTX 4000 series GPUs add one more trick: they can double the framerate of videos as part of the encoding workflow.

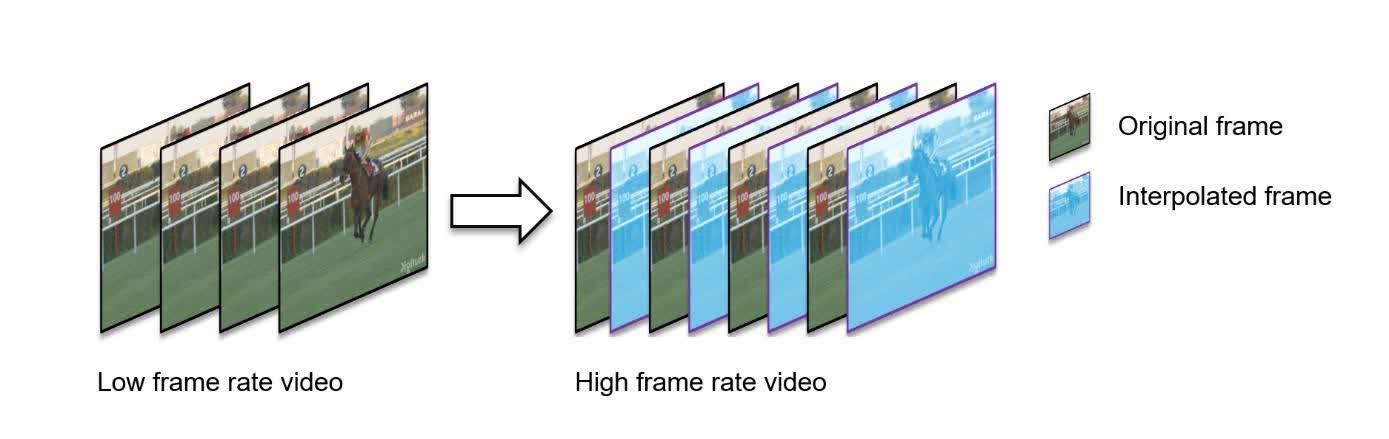

The Optical Flow Accelerators behind Nvidia’s new DLSS 3 feature won’t just increase video game framerates. Content creators can also use the technology to artificially increase framerates in the videos they encode.

While DLSS 2.0 uses the Tensor Cores in Nvidia's RTX 2000 and 3000 GPUs to generate new pixels through machine learning, DLSS 3 uses the 4000 series' Optical Flow Accelerators to generate entirely new frames. In PC games that support DLSS 3, this frame generation can double framerates on top of DLSS 2.0's performance gains, and Nvidia's tech can bring the same kind of improvement to videos.
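At its simplest, frame generation means inserting synthesized frames between real ones. The sketch below shows only that sequencing step, assuming a hypothetical `synthesize` callback that stands in for the actual interpolator (it is not an Nvidia API). Inserting one generated frame between every pair of real frames turns N frames into 2N - 1, so over any clip of meaningful length the framerate roughly doubles.

```python
from typing import Callable, List, TypeVar

F = TypeVar("F")  # any frame representation


def double_framerate(frames: List[F], synthesize: Callable[[F, F], F]) -> List[F]:
    """Insert one synthesized frame between each pair of consecutive real frames."""
    out: List[F] = []
    for prev, nxt in zip(frames, frames[1:]):
        out.append(prev)
        out.append(synthesize(prev, nxt))  # generated in-between frame
    if frames:
        out.append(frames[-1])  # the last real frame has no successor to pair with
    return out


# Usage: the "frames" are just brightness values, and the hypothetical
# synthesizer is a plain average of its two neighbors.
print(double_framerate([0, 10, 20], lambda a, b: (a + b) / 2))
# → [0, 5.0, 10, 15.0, 20]
```

All of the hard work lives inside `synthesize`; the quality of the generated frames, not the sequencing, is what separates hardware-assisted interpolation from simple frame blending.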

Motion vectors are one of the inputs DLSS uses to improve game framerates, and Nvidia also uses them in what it calls engine-assisted frame rate up-conversion (FRUC). Essentially, FRUC is a form of hardware-assisted motion interpolation. The concept resembles the motion smoothing many TVs perform, but the RTX 4000 series' CUDA cores and Optical Flow Accelerators make the process faster and more accurate. Where interpolated frames contain holes or artifacts, image-domain hole-infilling techniques fill them in to produce a clean final picture.
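To make the idea concrete, here is a minimal motion-compensated interpolation sketch in pure Python. It is not Nvidia's FRUC API or algorithm: it assumes grayscale frames and a dense per-pixel integer motion field already exist, and the function name `interpolate_midframe` and the "faster-moving pixel wins" occlusion rule are hypothetical choices for illustration. It forward-warps frame A halfway along each motion vector, then fills any holes left behind by averaging neighboring pixels, a crude form of image-domain hole infilling.

```python
from typing import List, Optional, Tuple

Frame = List[List[float]]           # grayscale frame: rows of pixel intensities
Flow = List[List[Tuple[int, int]]]  # per-pixel integer motion (dx, dy) from frame A to frame B


def interpolate_midframe(a: Frame, b: Frame, flow: Flow) -> Frame:
    """Synthesize the frame halfway between A and B (illustrative sketch only)."""
    h, w = len(a), len(a[0])
    mid: List[List[Optional[float]]] = [[None] * w for _ in range(h)]
    # Motion magnitude of whichever source pixel currently owns each target pixel.
    owner_mag = [[-1] * w for _ in range(h)]

    # 1) Forward-warp A by half of each motion vector (floor division for odd vectors).
    for y in range(h):
        for x in range(w):
            dx, dy = flow[y][x]
            mx, my = x + dx // 2, y + dy // 2
            if 0 <= mx < w and 0 <= my < h:
                mag = abs(dx) + abs(dy)
                # Heuristic: when two pixels land on the same spot,
                # assume the faster-moving one is in front.
                if mag > owner_mag[my][mx]:
                    owner_mag[my][mx] = mag
                    mid[my][mx] = a[y][x]

    # 2) Image-domain hole infilling for target pixels nothing was warped onto.
    out: Frame = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if mid[y][x] is not None:
                out[y][x] = float(mid[y][x])
                continue
            neighbors = [
                mid[ny][nx]
                for nx, ny in ((x - 1, y), (x + 1, y), (x, y - 1), (x, y + 1))
                if 0 <= nx < w and 0 <= ny < h and mid[ny][nx] is not None
            ]
            # Average the valid neighbors; if there are none, fall back to frame B.
            out[y][x] = sum(neighbors) / len(neighbors) if neighbors else float(b[y][x])
    return out


# Usage: a bright pixel moves two positions to the right between A and B.
a = [[0, 9, 0, 0, 0]]
b = [[0, 0, 0, 9, 0]]
flow = [[(0, 0), (2, 0), (0, 0), (0, 0), (0, 0)]]
print(interpolate_midframe(a, b, flow))
# → [[0.0, 4.5, 9.0, 0.0, 0.0]]
```

In the example, the moving pixel lands halfway along its path in the middle frame, and the gap it leaves behind is filled with a 4.5 blend of its neighbors. Production interpolators use sub-pixel flow, bidirectional warping, and far better infilling; the sketch only shows the shape of the pipeline.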

The FRUC library's APIs accept input surfaces in ARGB and NV12 formats, and the library can be used in both DirectX and CUDA applications.
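For readers unfamiliar with the two input formats, the sketch below computes how a 1080p frame is laid out in memory in each. It assumes 8 bits per channel and tightly packed rows; real GPU surfaces usually carry a row pitch (stride) padded for alignment, and the function names here are illustrative, not part of the FRUC library.

```python
def argb_frame_bytes(width: int, height: int) -> int:
    """ARGB: four 8-bit channels (alpha, red, green, blue) per pixel."""
    return width * height * 4


def nv12_layout(width: int, height: int) -> dict:
    """Plane offsets and total size of a tightly packed 8-bit NV12 frame."""
    if width % 2 or height % 2:
        raise ValueError("NV12 chroma is subsampled 2x2, so this sketch requires even dimensions")
    y_size = width * height  # full-resolution luma (Y) plane, 1 byte per pixel
    # One interleaved U/V plane at half width and half height, 2 bytes per UV pair.
    uv_size = (width // 2) * (height // 2) * 2
    return {"y_offset": 0, "uv_offset": y_size, "total_bytes": y_size + uv_size}


print(nv12_layout(1920, 1080))       # → {'y_offset': 0, 'uv_offset': 2073600, 'total_bytes': 3110400}
print(argb_frame_bytes(1920, 1080))  # → 8294400
```

NV12 averages 12 bits per pixel versus ARGB's 32, so an NV12 frame is 3/8 the size of the equivalent ARGB frame, which is one reason video pipelines tend to stay in NV12.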

The enhanced motion interpolation could set Lovelace apart from Intel's Arc Alchemist series and AMD's RDNA3 GPUs, since all three introduce GPU-based AV1 encoding. Early tests show that AV1 has big advantages over H.264 in encoding speed, data usage, and image quality. The new format lets streamers and content creators encode higher-resolution video more efficiently. Unlike H.265, AV1 is also royalty-free.

Google is also pushing AV1 as the format becomes increasingly important on YouTube. This week, the company released a significant update to its open-source AV1 encoder, AOM-AV1 3.5, which adds frame-parallel encoding for higher thread counts. Depending on the video resolution and the number of processor threads, the update could cut encode times by 18 to 34 percent.
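To put the 18 to 34 percent range in concrete terms, the arithmetic below applies it to a hypothetical 60-minute encode (the baseline is illustrative, not a benchmark result). It also shows that cutting encode time by a given percentage raises throughput by a proportionally larger factor.

```python
def encode_time_after_cut(baseline_minutes: float, cut_percent: float) -> float:
    """Encode time remaining after reducing it by cut_percent."""
    return baseline_minutes * (1 - cut_percent / 100)


def throughput_gain(cut_percent: float) -> float:
    """How many times more video gets encoded in the same wall-clock time."""
    return 1 / (1 - cut_percent / 100)


for cut in (18, 34):
    print(f"{cut}% cut: 60 min -> {encode_time_after_cut(60, cut):.1f} min, "
          f"{throughput_gain(cut):.2f}x throughput")
# → 18% cut: 60 min -> 49.2 min, 1.22x throughput
# → 34% cut: 60 min -> 39.6 min, 1.52x throughput
```

At the top of the range, a 34 percent cut means roughly one and a half times as much video encoded per hour on the same machine.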
