What just happened? IBM Research has published a paper describing an analog chip that promises GPU-level performance for AI inference tasks with significantly better power efficiency. GPUs are widely used for AI processing today, but their high power consumption drives up operating costs.

The new analog AI chip, which is still in development, can both compute and store data in the same location. This design emulates how a human brain works, which improves power efficiency. The technology diverges from current solutions, which must constantly move data between memory and processing units, slowing computation and increasing power consumption.
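
To make the idea of computing where the data lives more concrete, here is a minimal Python sketch of an idealized crossbar array. It rests on assumptions the article does not spell out: weights are stored as conductances, inputs are applied as voltages, and each column's output current is the sum of voltage times conductance. The function name `crossbar_matvec` and the sample values are hypothetical, and real analog hardware adds noise and converter limits that this sketch ignores.

```python
def crossbar_matvec(conductances, voltages):
    """Idealized, noise-free crossbar (illustrative assumption, not IBM's design).

    conductances[r][c] is the stored weight at row r, column c.
    voltages[r] is the input applied to row r.
    Each column output is the sum over rows of voltage * conductance.
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("one input voltage per crossbar row is required")
    return [
        sum(voltages[r] * conductances[r][c] for r in range(n_rows))
        for c in range(n_cols)
    ]


# Hypothetical 2-by-2 example: the weights never leave the array.
weights = [[1.0, 2.0],
           [3.0, 4.0]]
inputs = [1.0, 0.5]
outputs = crossbar_matvec(weights, inputs)
print(outputs)
```

The point of the sketch is that the multiply-and-accumulate happens in the same structure that holds the weights, so no weight has to be fetched from a separate memory for each input.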

In the company’s internal tests, the new chip exhibited a 92.81 percent accuracy rate on the CIFAR-10 image dataset when assessing the compute precision of analog in-memory computing. IBM asserts that this accuracy level is comparable to that of any existing chip using similar technology. What’s even more impressive is its energy efficiency during testing, consuming a mere 1.51 microjoules of energy per input.
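
To put the reported energy figure in perspective, the short Python sketch below converts 1.51 microjoules per input into total energy for a batch and into inputs processed per joule. Only the 1.51 microjoule figure comes from the reported results; the function names and the batch size are hypothetical, and the calculation assumes the per-input energy stays constant across a batch.

```python
ENERGY_PER_INPUT_J = 1.51e-6  # 1.51 microjoules per input, as reported


def energy_joules(num_inputs):
    """Total energy for num_inputs, assuming constant energy per input."""
    return num_inputs * ENERGY_PER_INPUT_J


def inputs_per_joule():
    """How many inputs one joule would cover at the reported rate."""
    return 1.0 / ENERGY_PER_INPUT_J


# Hypothetical batch of one million inputs.
print(f"{energy_joules(1_000_000):.2f} J for one million inputs")
print(f"about {inputs_per_joule():,.0f} inputs per joule")
```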

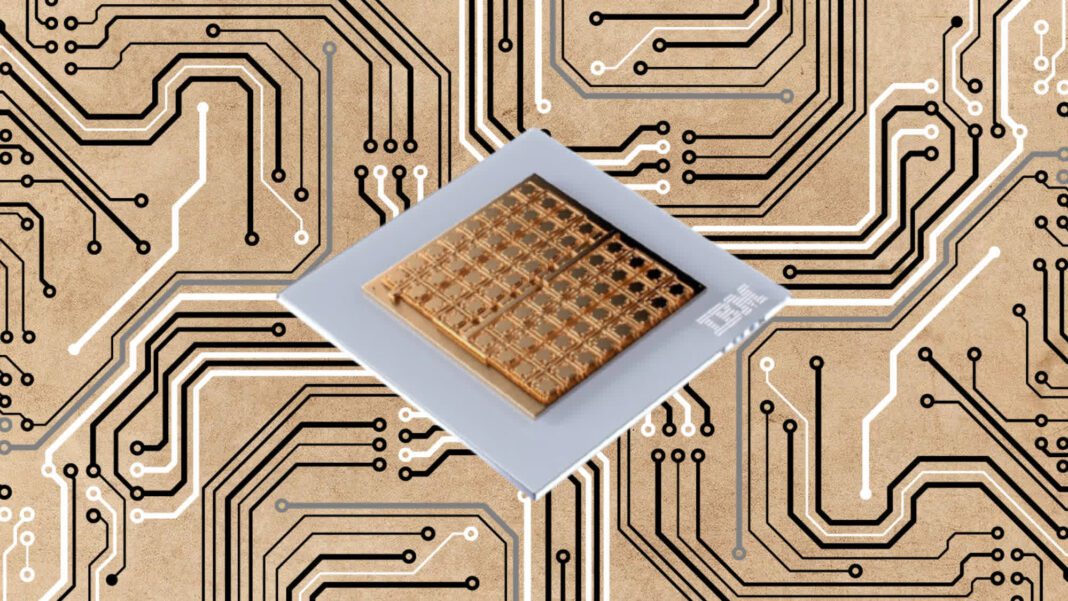

The research paper, published last week in Nature Electronics, also provides additional insights into the chip’s construction. The chip is built on 14 nm complementary metal-oxide-semiconductor (CMOS) technology and features 64 analog in-memory compute cores (or tiles). Each core incorporates a 256-by-256 crossbar array of synaptic unit cells and can perform the computations corresponding to one layer of a deep neural network (DNN) model. Additionally, the chip is equipped with a global digital processing unit capable of executing more intricate operations crucial for certain types of neural networks.
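
The core and crossbar figures translate into a rough capacity estimate, sketched below in Python. The 64 cores and 256-by-256 arrays come from the paper as reported; the one-weight-per-unit-cell mapping, the simple rectangular tiling, and the helper names are assumptions made for illustration and may not match how IBM actually maps layers onto the chip.

```python
import math

NUM_CORES = 64    # analog in-memory compute cores (as reported)
TILE_ROWS = 256   # crossbar rows per core (as reported)
TILE_COLS = 256   # crossbar columns per core (as reported)


def total_unit_cells():
    """Total synaptic unit cells across all cores."""
    return NUM_CORES * TILE_ROWS * TILE_COLS


def tiles_needed(in_features, out_features):
    """Hypothetical helper: tiles required for a dense layer's weight matrix,
    assuming one weight per unit cell and plain rectangular tiling."""
    return math.ceil(in_features / TILE_ROWS) * math.ceil(out_features / TILE_COLS)


def fits_on_chip(in_features, out_features):
    """True if the layer fits within the 64 cores under the same assumptions."""
    return tiles_needed(in_features, out_features) <= NUM_CORES


print(total_unit_cells())        # 64 * 256 * 256 unit cells
print(tiles_needed(512, 1024))   # a 512-by-1024 layer under this tiling
print(fits_on_chip(512, 1024))
```

Under these assumptions, a layer whose weight matrix is no larger than 256 by 256 fits in a single core, while larger layers would have to be split across several cores.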

The new IBM chip is an intriguing advancement, particularly considering the steep increase in power consumption among AI processing systems in recent times. Reports suggest that AI inferencing racks can consume up to 10 times the power of regular server racks, resulting in exorbitant AI processing costs and environmental concerns. In this context, any improvement in efficiency would be warmly welcomed by the industry.

As an added benefit, a dedicated and power-efficient AI chip could potentially decrease the demand for GPUs, subsequently leading to reduced prices for gamers. However, it’s important to note that this is currently speculative, as the IBM chip is still in the development phase. The timeline for its transition into mass production remains uncertain. Until that happens, GPUs will persist as the primary choice for AI processing, making it unlikely that they will become more affordable in the near future.