
Forward-looking: A new report has revealed the enormous number of Nvidia GPUs Microsoft used, and the engineering changes it made in arranging them, to help OpenAI train ChatGPT. The news comes as Microsoft announces a significant upgrade to its AI supercomputer to further its homegrown generative AI initiative.

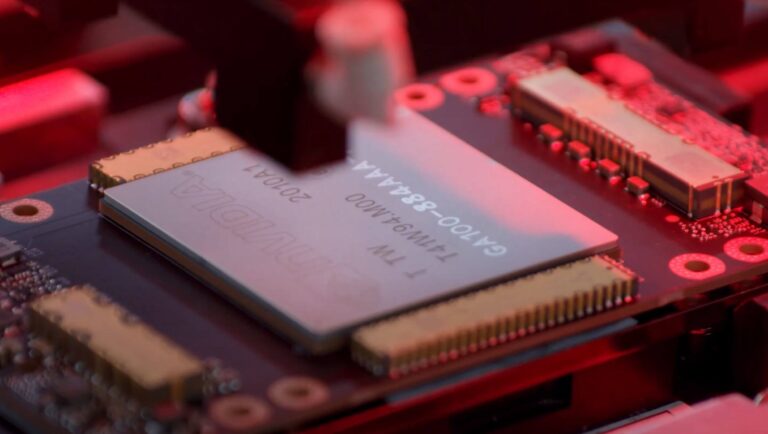

According to Bloomberg, OpenAI trained ChatGPT on a supercomputer Microsoft built from tens of thousands of Nvidia A100 GPUs. Microsoft announced a new array utilizing Nvidia’s newer H100 GPUs this week.

The challenge facing the companies began in 2019, when Microsoft invested $1 billion in OpenAI and agreed to build an AI supercomputer for the startup. However, Microsoft didn’t have the hardware in-house for what OpenAI needed.

After acquiring Nvidia’s chips, Microsoft had to rethink how it arranged such a massive number of GPUs to prevent overheating and power outages. The company won’t say precisely how much the endeavor cost, but executive vice president Scott Guthrie put the number above several hundred million dollars.


Running all the A100s simultaneously forced Redmond to reconsider how it placed them and their power supplies. It also had to develop new software to increase efficiency, ensure the networking equipment could withstand massive amounts of data, design new cable trays that it could manufacture independently, and use multiple cooling methods. Depending on the local climate, the cooling techniques included evaporation, swamp coolers, and outside air.

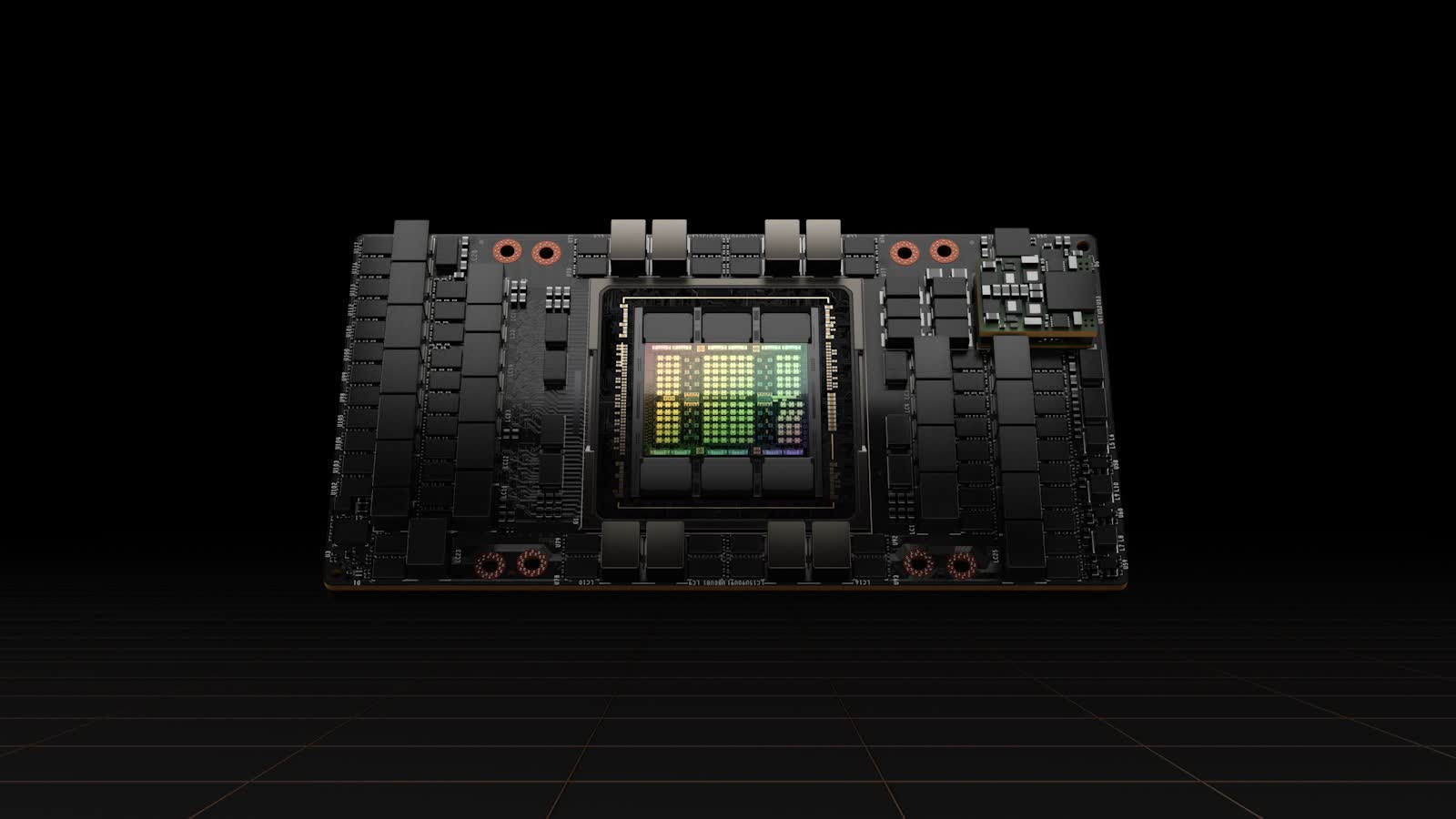

Since the initial success of ChatGPT, Microsoft and some of its rivals have started work on comparable AI models for search engines and other applications. To speed up its generative AI, the company has introduced the ND H100 v5 VM, a virtual machine that can scale from eight to thousands of Nvidia H100 GPUs.

The H100s connect through NVSwitch and NVLink 4.0, with 3.6 TB/s of bisection bandwidth among the eight local GPUs within each virtual machine. Each GPU gets 400 Gb/s of bandwidth through Nvidia Quantum-2 CX7 InfiniBand and a 64 GB/s PCIe 5.0 connection. Each virtual machine therefore manages 3.2 Tb/s through a non-blocking fat-tree network. Microsoft’s new system also features 4th-generation Intel Xeon processors and 16-channel 4800 MHz DDR5 RAM.
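The per-VM network figure follows from the per-GPU figure, and a short calculation makes the units easier to compare. The sketch below is only illustrative arithmetic on the numbers quoted above; the constant and function names are hypothetical, and it assumes decimal prefixes (1 Tb = 1,000 Gb) and 8 bits per byte.

```python
# Illustrative arithmetic only; names are hypothetical, figures come from the article.
GPUS_PER_VM = 8            # local H100 GPUs in one ND H100 v5 virtual machine
IB_GBITS_PER_GPU = 400     # InfiniBand bandwidth per GPU, in gigabits per second


def aggregate_infiniband_tbits(gpus: int = GPUS_PER_VM,
                               gbits_per_gpu: int = IB_GBITS_PER_GPU) -> float:
    """Total InfiniBand bandwidth per VM in terabits per second (1 Tb = 1000 Gb)."""
    return gpus * gbits_per_gpu / 1000


def gbits_to_gbytes(gbits: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbits / 8


if __name__ == "__main__":
    print(aggregate_infiniband_tbits())       # 3.2 (Tb/s per VM)
    print(gbits_to_gbytes(IB_GBITS_PER_GPU))  # 50.0 (GB/s per GPU)
```

In other words, eight GPUs at 400 Gb/s each account for the 3.2 Tb/s quoted per virtual machine. Note the lowercase "b" (bits) in the InfiniBand figures versus the uppercase "B" (bytes) in the NVLink and PCIe figures.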

Microsoft plans to use the ND H100 v5 VM for its new AI-powered Bing search engine, Edge web browser, and Microsoft Dynamics 365. The virtual machine is now available in preview and will become a standard offering in the Azure portfolio. Prospective users can request access.
