An Apple executive recently gave a detailed explanation of why the company abandoned its CSAM detection feature.

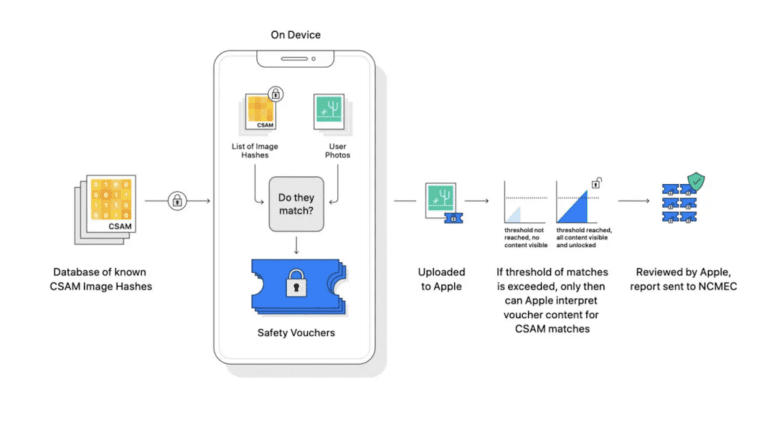

The Heat Initiative, a child safety group, challenged Apple on its reasoning for dropping CSAM detection. Child sexual abuse material (CSAM) is a major concern that Apple had planned to address with on-device tools that would detect known abuse images in iCloud Photos. However, Apple abandoned the initiative in December last year, which drew further controversy.

Apple responded to the Heat Initiative's questions about why the tool was abandoned. Erik Neuenschwander, Apple's director of user privacy and child safety, wrote that child sexual abuse material is ‘abhorrent’ and that the company is ‘committed to breaking the influence and coercion that makes children susceptible to it.’ He then explained that scanning users' private iCloud data would create ‘new threat vectors’ that could be exploited and would ‘inject potential for a slippery slope and unintended consequences.’

Apple's related on-device child safety features, such as Communication Safety, will remain in iOS 17, and the company hopes to expand them to more areas over time.