
The big picture: Nvidia's new strategy centers on generative AI, large language models, and recommender systems, and so does its latest DGX supercomputer. The company believes these will soon form the "digital engines of the modern economy" as companies like Meta, Google, and Microsoft race to realize the benefits of AI using Nvidia's Grace, Hopper, and Ada Lovelace hardware architectures.

By now it's no secret that Nvidia has gone all-in on selling shovels to companies big and small that are digging manically through the generative AI landscape in search of digital treasure. The company is well positioned to capitalize on this trend and could very well become the first chipmaker with a $1 trillion valuation. That would be more than double the valuation of TSMC, the company that manufactures more than half of the world's most advanced chips.

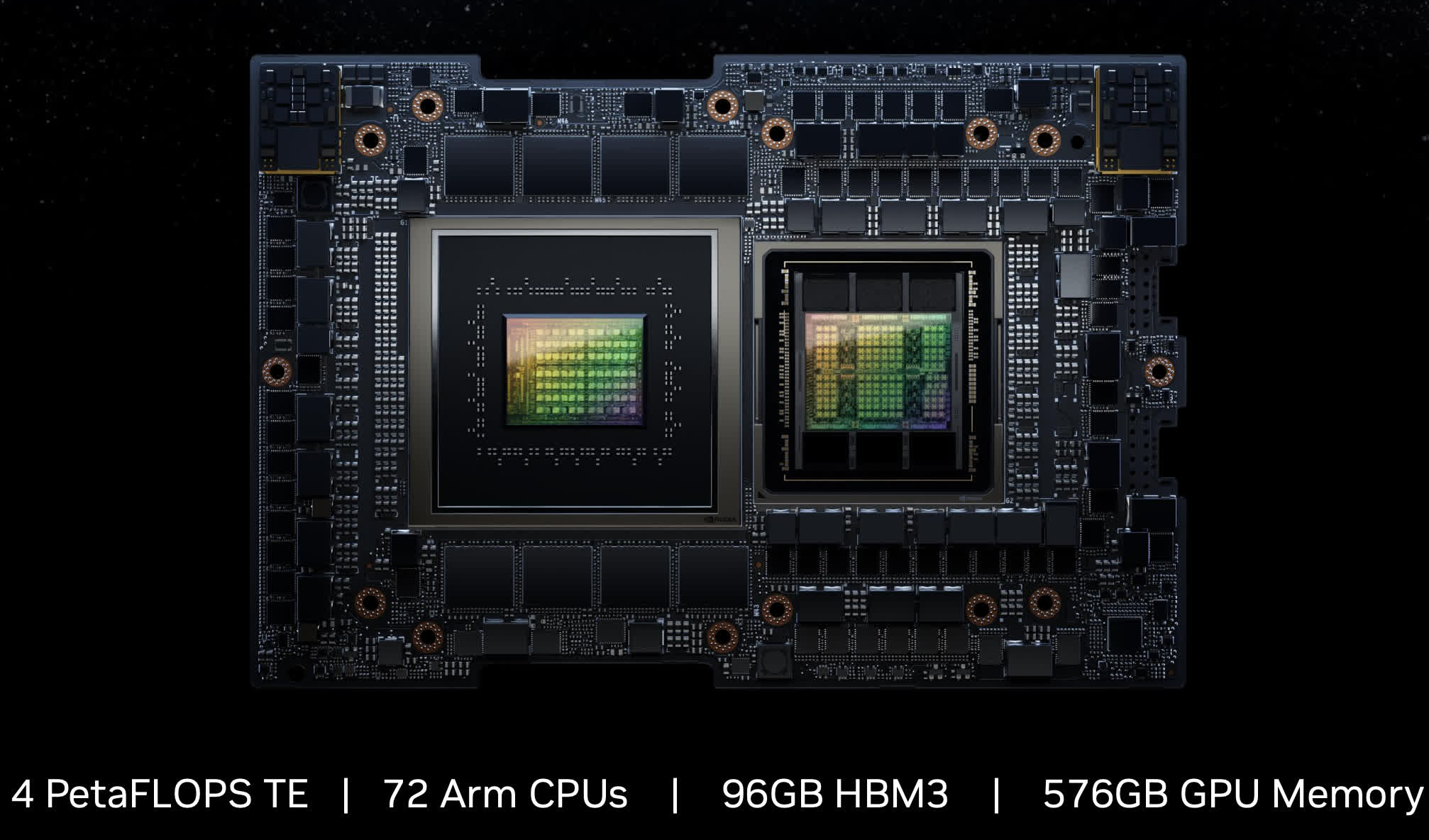

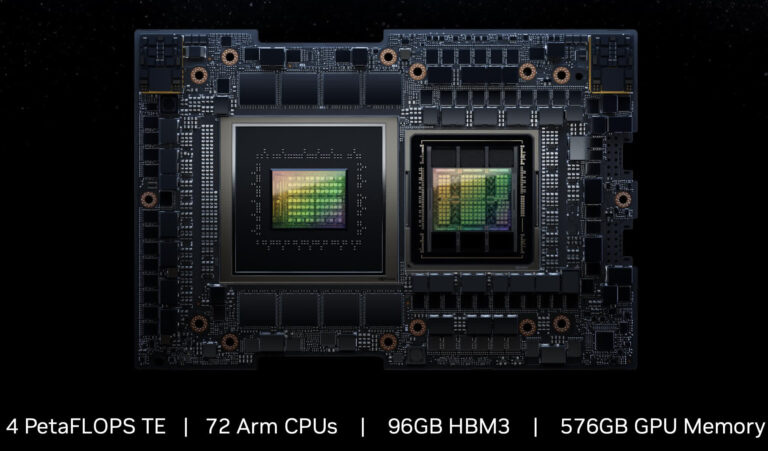

Nvidia's announcements at Computex 2023 reflect this strategy well. Nvidia CEO Jensen Huang revealed that the company's Grace Hopper GH200 superchips are now in full production, highlighting their potential to accelerate compute services and software, both for new business models and for optimizing existing ones.

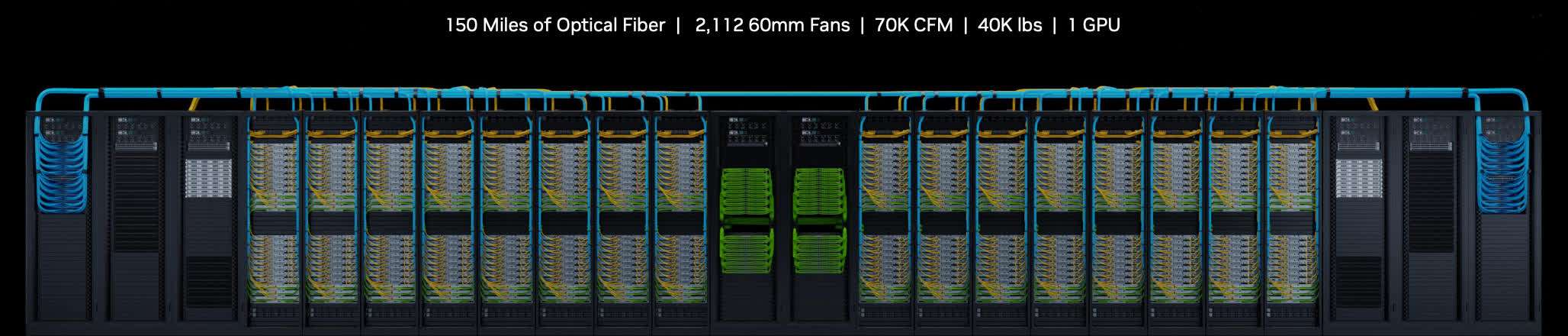

Huang says the tech industry has hit a hard wall with traditional architectures in recent years, which is why it has increasingly turned to GPUs and accelerated computing to solve complex computing tasks. To satisfy this surge in demand, Nvidia has developed a new DGX GH200 supercomputing platform that packs 256 Grace Hopper GH200 superchips.

Each Grace Hopper superchip combines a Grace CPU and an H100 Tensor Core GPU, and the DGX GH200 system is said to deliver one exaflop of AI compute performance as well as ten times more memory bandwidth than the previous generation. For reference, the first exascale computer was the Frontier supercomputer at Oak Ridge National Laboratory in Tennessee, which reached 1.1 exaflops on the Linpack (HPL) benchmark last year, taking the crown from Japan's Fugaku system. The two figures are not directly comparable, though: Linpack measures double-precision (FP64) performance, while Nvidia's exaflop figure refers to lower-precision (FP8) AI math.

The DGX GH200 also comes equipped with 144 terabytes of shared memory, nearly 500 times more than the DGX A100 system it replaces. This should make it easier for companies to build and run generative AI models like the one behind ChatGPT. Nvidia says Microsoft, Google Cloud, and Meta are among the first customers for the new supercomputer, while Japan's SoftBank is looking to bring GH200 superchips to data centers across the country.
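The 144 TB figure can be sanity-checked with some back-of-the-envelope arithmetic. The sketch below is only an approximation and rests on assumptions that don't come from the article: each GH200 superchip is assumed to pair up to 480 GB of LPDDR5X (CPU side) with 96 GB of HBM3 (GPU side), and the DGX A100 baseline is assumed to be its original 320 GB configuration.

```python
# Back-of-the-envelope check of the DGX GH200 shared-memory figures.
# ASSUMPTIONS (not stated in the article): 480 GB LPDDR5X + 96 GB HBM3 per
# GH200 superchip, and a 320 GB DGX A100 as the comparison baseline.

SUPERCHIPS_PER_DGX_GH200 = 256  # from the article
LPDDR5X_GB_PER_CHIP = 480       # assumption: Grace CPU memory per superchip
HBM3_GB_PER_CHIP = 96           # assumption: H100 GPU memory per superchip
DGX_A100_GB = 320               # assumption: original DGX A100 GPU memory


def pooled_memory_gb(chips: int) -> int:
    """Total memory across all superchips in the shared pool, in GB."""
    return chips * (LPDDR5X_GB_PER_CHIP + HBM3_GB_PER_CHIP)


total_gb = pooled_memory_gb(SUPERCHIPS_PER_DGX_GH200)
total_tb_binary = total_gb / 1024       # convert using 1,024 GB per TB
ratio_vs_a100 = total_gb / DGX_A100_GB  # how many DGX A100s' worth of memory

print(f"Pooled memory: {total_gb} GB (~{total_tb_binary:.0f} TB)")
print(f"Ratio vs. DGX A100: {ratio_vs_a100:.1f}x")
```

Under these assumptions, the pool works out to 147,456 GB, or exactly 144 TB in binary units, and the ratio to a 320 GB DGX A100 comes out at roughly 460x, in line with the "nearly 500 times" comparison.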

Nvidia will also link four DGX GH200 systems using Quantum-2 InfiniBand networking with up to 400 Gb/s of bandwidth to create its own AI supercomputer, called Helios. Separately, the company says more than 400 system configurations integrating the Hopper, Grace, and Ada Lovelace architectures will come to market in the coming months, targeting a variety of high-performance computing applications.

Standing next to a life-size representation of the DGX GH200 system on stage, Huang described it as "four elephants, one GPU," since every GH200 unit has access to the entire 144-terabyte memory pool. He also joked that he wondered whether the new system could run Crysis. Given that enthusiasts have managed to run the famous title directly from a GeForce RTX 3090's VRAM, a monster like the DGX GH200 could probably run thousands of simultaneous instances.

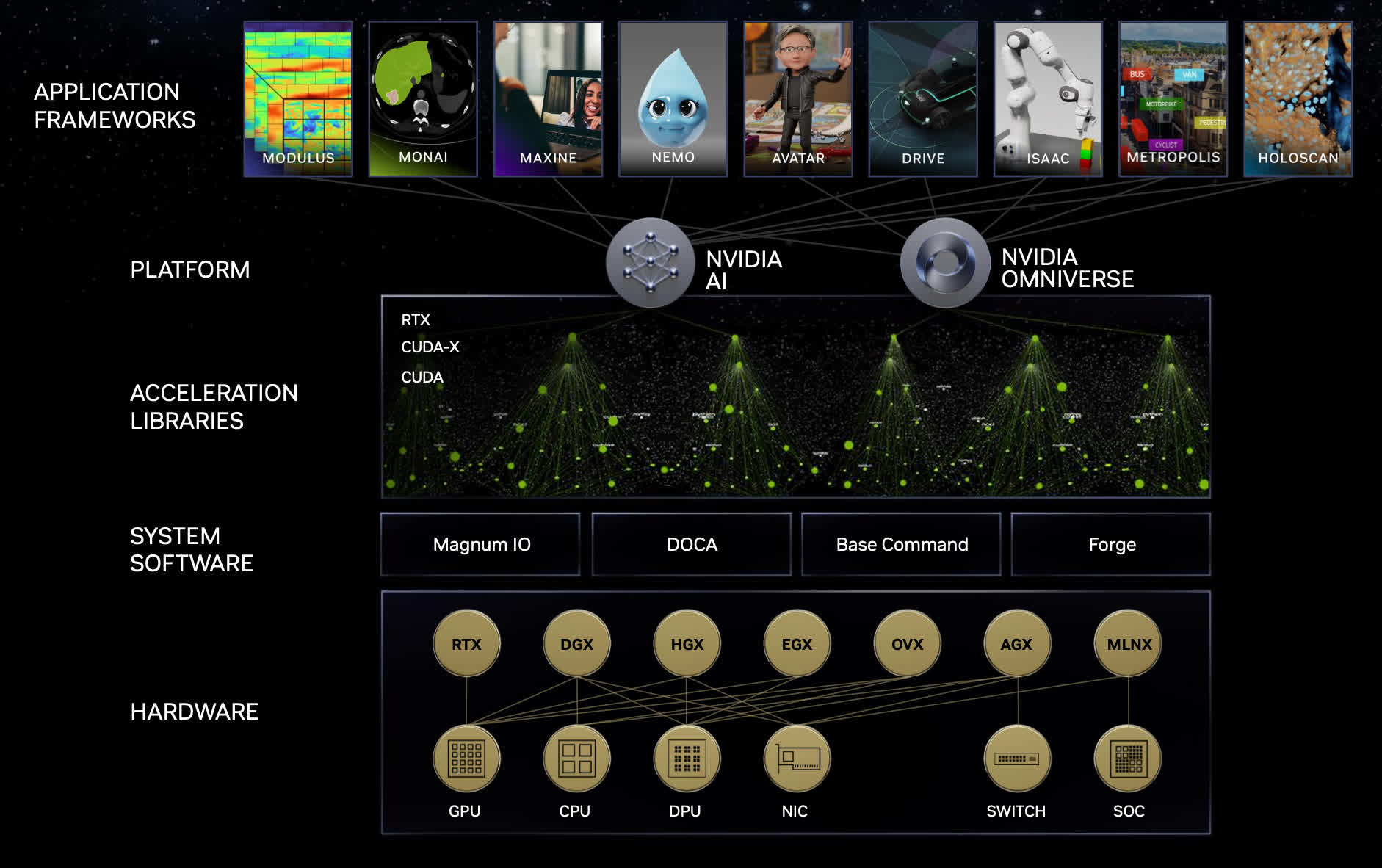

One thing is for sure: Nvidia is laser-focused on capitalizing on the AI chip boom, since powering advancements in this area brings in more than half of its revenue. The new DGX supercomputer is yet another attempt to keep the industry hooked on Nvidia products. Whether a company wants to power 5G networks, generative AI services, factory robots, augmented and virtual reality experiences, or advertising engines, Nvidia wants to be the go-to vendor for every enterprise looking to use accelerated computing.

Gamers are still on the company's radar, albeit more through the lens of what AI can do to enhance gaming experiences. For instance, Nvidia's newly announced Avatar Cloud Engine (ACE) for Games will let developers improve interactions with non-player characters by linking them to a large language model. The company wouldn't say what the system requirements for this new technology would look like, but we do know Nvidia's research arm is busy exploring ways to use AI to optimize assets in future games.
