
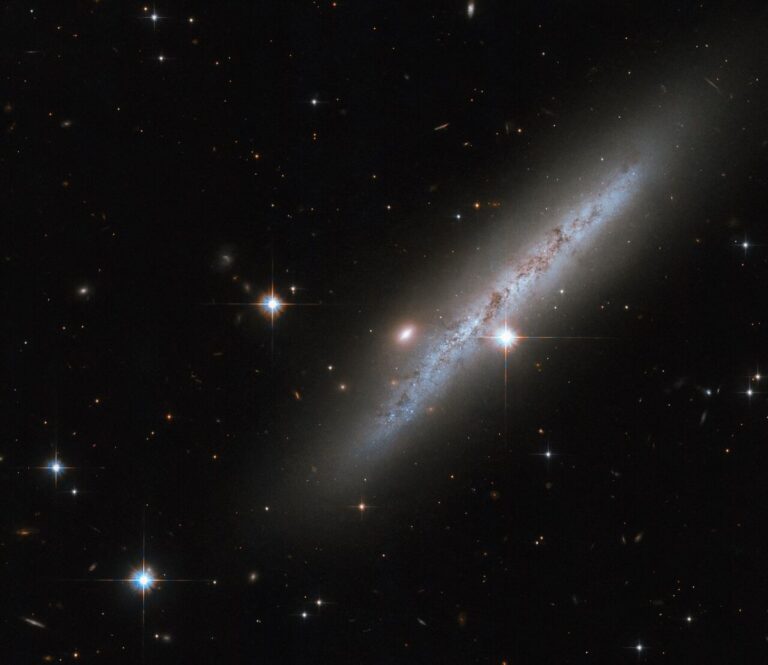

Since its launch in 1990, the Hubble Space Telescope has made more than one million observations, broadening humanity’s knowledge of the cosmos almost immeasurably.

Yet Hubble doesn’t see the universe as we do. The telescope views the cosmos across a broad range of light wavelengths, some of which our eyes are incapable of seeing.

If we looked out across space with our own eyes, we would miss much of what Hubble sees, and a great deal of what we could see would look very different from the images delivered by the iconic space telescope.

So how is the data this pioneering space telescope collects turned into stunning visuals that we can see, understand and marvel at? And how much of this imagery is “real”?


What cameras does Hubble have?

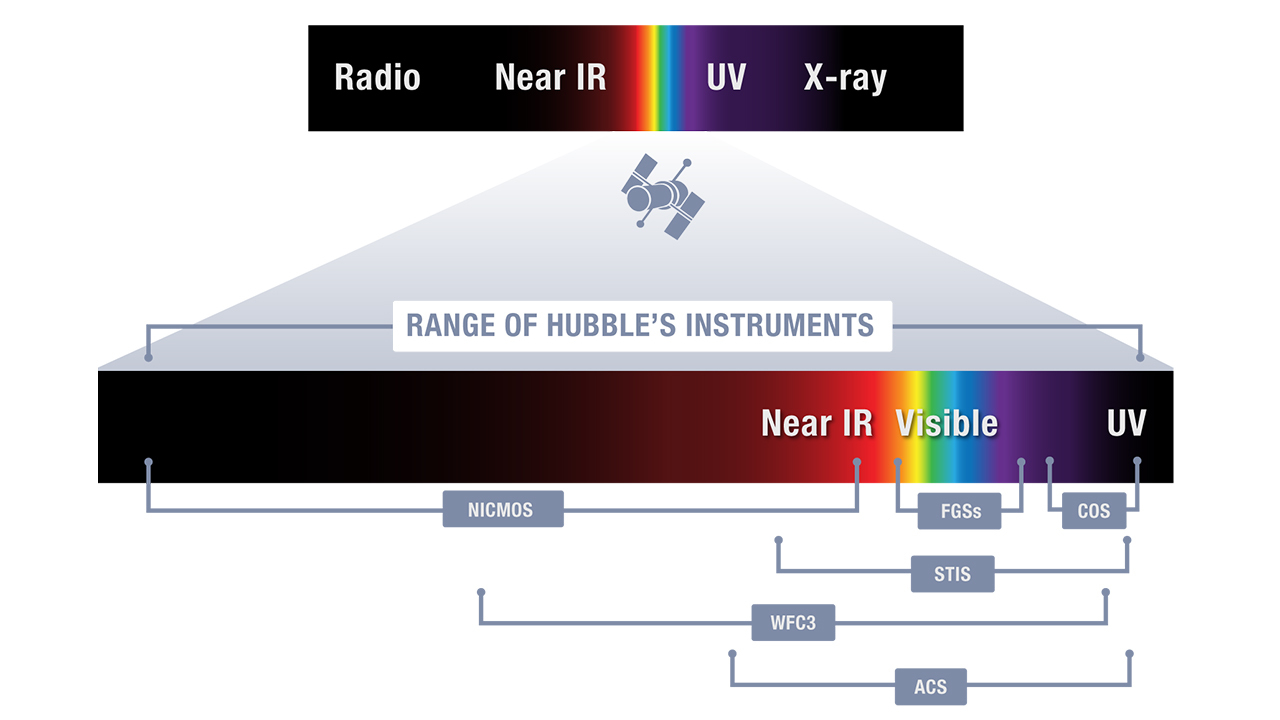

According to NASA, Hubble has two primary camera systems, which it uses to observe the universe from its position roughly 332 miles (535 kilometers) above Earth’s surface.

Working in unison, the Advanced Camera for Surveys (ACS) and the Wide Field Camera 3 (WFC3) are able to provide astronomers with wide-field imaging over a broad range of wavelengths. Both camera systems were installed on Hubble by spacewalking astronauts after the observatory’s April 1990 launch aboard the space shuttle Discovery.

ACS joined Hubble in 2002 and was designed primarily for wide-field imagery in visible wavelengths. The ACS system is composed of three cameras or “channels” that capture different types of images, allowing Hubble to perform surveys and broad imaging campaigns.

Two of those channels stopped working in 2007 because of an electronics failure. Astronauts repaired one of them two years later, restoring the ability of ACS to take high-resolution, wide-field pictures.

That 2009 repair was performed during Hubble’s Servicing Mission 4, which also installed the WFC3 system, now the telescope’s main imager.

Hubble had cameras before these instruments were installed, of course. Its earlier cameras include the Wide Field and Planetary Camera, the Wide Field and Planetary Camera 2 and the Faint Object Camera, and it also once carried the High-Speed Photometer.

Hubble’s six instruments also include two spectrographs: the Cosmic Origins Spectrograph (COS) and the Space Telescope Imaging Spectrograph (STIS).

These spectrographs split light into its component wavelengths, much as a prism spreads white light into a rainbow. Because each chemical element and compound emits and absorbs light at characteristic wavelengths, the resulting spectra let astronomers learn about the composition of the objects Hubble observes.
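To illustrate how characteristic wavelengths reveal composition, here is a minimal Python sketch of line identification. It assumes the line positions have already been measured from a spectrum and ignores Doppler shifts. The reference values are standard laboratory (air) wavelengths for a few familiar visible-light lines; the function name, tolerance and “observed” values are hypothetical. Real analyses of Hubble spectra, many of them in the ultraviolet, rely on far larger line lists and careful modeling.

```python
# Laboratory (air) wavelengths, in nanometers, of a few well-known lines.
REFERENCE_LINES_NM = {
    "H-alpha": 656.28,   # hydrogen
    "H-beta": 486.13,    # hydrogen
    "Na I D2": 589.00,   # sodium
    "Na I D1": 589.59,   # sodium
    "Ca II K": 393.37,   # ionized calcium
    "Ca II H": 396.85,   # ionized calcium
}


def identify_lines(observed_nm, tolerance_nm=0.2):
    """Match each observed line to the nearest reference line.

    Returns (observed wavelength, line name) pairs; an observed wavelength
    with no reference line within tolerance_nm is left out.
    """
    matches = []
    for wavelength in observed_nm:
        # Find the closest reference line, then accept it only if close enough.
        name, rest = min(REFERENCE_LINES_NM.items(),
                         key=lambda item: abs(item[1] - wavelength))
        if abs(rest - wavelength) <= tolerance_nm:
            matches.append((wavelength, name))
    return matches


# Hypothetical dips measured in a star's spectrum.
print(identify_lines([656.3, 589.0, 393.4, 500.0]))
# [(656.3, 'H-alpha'), (589.0, 'Na I D2'), (393.4, 'Ca II K')]
```

The 500 nm value has no reference line within the tolerance, so it is dropped rather than forced onto the nearest match.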


What wavelengths does Hubble see?

One of Hubble’s advantages as it circles Earth about 15 times each day is that, from above the atmosphere, it can detect wavelengths of light that our planet’s atmosphere would otherwise absorb. The telescope’s 7.8-foot-wide (2.4 meters) primary mirror gathers light across a wide range of wavelengths, including many that ground-based telescopes can’t see, and focuses it toward the telescope’s instruments and cameras.
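As a rough check on the “about 15 times each day” figure, Kepler’s third law for a circular orbit, T = 2π√(a³/GM), gives the orbital period from the orbit’s radius a measured from Earth’s center. This Python sketch assumes a perfectly circular orbit at the 535-kilometer altitude quoted earlier and a mean Earth radius of 6,371 km; it ignores Earth’s oblateness and the slow decay of Hubble’s orbit.

```python
import math

GM_EARTH_KM3_S2 = 398_600.4418   # Earth's standard gravitational parameter (km^3/s^2)
EARTH_RADIUS_KM = 6_371.0        # mean radius; Earth is not a perfect sphere


def orbital_period_s(altitude_km):
    """Period, in seconds, of a circular orbit at the given altitude."""
    a = EARTH_RADIUS_KM + altitude_km   # orbit radius from Earth's center
    return 2 * math.pi * math.sqrt(a**3 / GM_EARTH_KM3_S2)


period = orbital_period_s(535)
print(f"period ≈ {period / 60:.1f} min, ≈ {86_400 / period:.1f} orbits per day")
# period ≈ 95.2 min, ≈ 15.1 orbits per day
```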

Hubble’s cameras can see the universe from the infrared region of the electromagnetic spectrum through visible light wavelengths all the way up to ultraviolet light.

The primary capabilities of the telescope are in the ultraviolet and visible parts of the spectrum from 100 to 800 nanometers, though the telescope can also see light with wavelengths as long as 2,500 nanometers.

ACS is used predominantly to collect light in visible wavelengths but is also capable of seeing ultraviolet and near-infrared light. Meanwhile, WFC3 provides wide-field imagery in ultraviolet, visible and infrared light.

While the STIS spectrometer works with a broad range of wavelengths, COS focuses exclusively on ultraviolet light and is considered the most sensitive ultraviolet spectrograph ever built.

COS has boosted Hubble’s sensitivity by a factor of at least 10 in the ultraviolet spectrum, resulting in a net 70-times sensitivity boost when looking at very faint objects, mission team members say.

Why does Hubble take pictures in so many wavelengths?

Hubble sees the universe in many different “shades of gray.” Viewing the cosmos in what to us looks like monochrome, Hubble is capable of highlighting subtle differences in the intensity of light at different wavelengths, which helps scientists understand physical processes and the composition of objects.

Observing ultraviolet light is especially useful when Hubble is looking at extremely faint objects and single points of light, like stars and quasars. Seeing in infrared, on the other hand, is vital for the examination of very distant objects that existed in the early history of the universe.

This is because, as light travels from these faraway objects, the expansion of the universe “stretches” its wavelengths. This process is called “redshifting,” because longer wavelengths of light in the visible spectrum are red (compared to blue at the shorter end).

The longer this light has been traversing the universe, the more extreme the redshift of its wavelength. This means that ancient objects that emitted visible light are now better seen in long-wavelength infrared light.
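The stretching can be written with the standard relation λ_obs = (1 + z) λ_emit, where z is the redshift. The Python sketch below applies it to green light emitted at 500 nanometers, using a few illustrative redshift values (not measurements of any particular object) and the 2,500-nanometer upper limit quoted above for Hubble.

```python
HUBBLE_MAX_NM = 2_500  # longest wavelength Hubble can detect, per the figure above


def observed_wavelength_nm(emitted_nm, z):
    """Wavelength after a cosmological redshift z stretches it by (1 + z)."""
    return emitted_nm * (1 + z)


for z in (0.5, 2, 4, 9):
    obs = observed_wavelength_nm(500, z)   # 500 nm: green light when emitted
    reach = "within" if obs <= HUBBLE_MAX_NM else "beyond"
    print(f"z = {z}: {obs:.0f} nm ({reach} Hubble's reach)")
# z = 0.5: 750 nm (within Hubble's reach)
# z = 2: 1500 nm (within Hubble's reach)
# z = 4: 2500 nm (within Hubble's reach)
# z = 9: 5000 nm (beyond Hubble's reach)
```

Light that left its source as visible green ends up in the infrared at modest redshifts and, for the most distant objects, beyond even Hubble’s longest wavelengths.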

Hubble’s ability to study such stretched light from early stars has allowed scientists to better constrain the age of the universe, to around 13.8 billion years. The capability to see early galaxies and stars has also massively increased our understanding of how the universe has evolved since its early epochs.

The earliest and most distant object Hubble has been able to image thus far is the highly redshifted galaxy GN-z11, which is located about 13.4 billion light-years away.

From a purely practical standpoint, the ability to see in a wide range of wavelengths means that Hubble is useful to a broad community of researchers studying a huge variety of cosmic objects and events.

Hubble’s coverage of ultraviolet and visible light also means that it won’t be replaced by NASA’s new James Webb Space Telescope (JWST). Although JWST is the most powerful space telescope humanity has ever launched, it observes mainly in infrared light, while Hubble also covers ultraviolet and most visible wavelengths, which fall outside JWST’s range. As a result, JWST and Hubble make an excellent team for observing the cosmos.


How ‘real’ are Hubble’s photos? What would we see?

Human eyes see only a small slice of the electromagnetic spectrum, the band between infrared and ultraviolet light from about 380 to 700 nanometers. Hubble’s detectors, meanwhile, record how much light reaches each pixel rather than its color, so the raw images the telescope produces look black and white to us.
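A quick way to see how narrow our window is: this Python sketch labels wavelengths using the approximate 380 to 700 nm limits of human vision given above and compares that band with the roughly 100 to 2,500 nm reach quoted earlier for Hubble. The band boundaries are approximate, the labels only make sense within Hubble’s range, and the comparison is measured linearly in nanometers, which is just one way to express it.

```python
HUMAN_VISIBLE_NM = (380, 700)   # approximate limits of human vision
HUBBLE_RANGE_NM = (100, 2_500)  # approximate full reach of Hubble's instruments


def band(wavelength_nm):
    """Label a wavelength (nm) within Hubble's range as UV, visible or infrared."""
    low, high = HUMAN_VISIBLE_NM
    if wavelength_nm < low:
        return "ultraviolet"
    if wavelength_nm <= high:
        return "visible"
    return "infrared"


# Share of Hubble's wavelength span (measured linearly in nm) that eyes can see.
visible_span = HUMAN_VISIBLE_NM[1] - HUMAN_VISIBLE_NM[0]
hubble_span = HUBBLE_RANGE_NM[1] - HUBBLE_RANGE_NM[0]
print(f"{visible_span / hubble_span:.0%} of Hubble's range is visible to us")
# 13% of Hubble's range is visible to us

for nm in (150, 550, 1_600):
    print(nm, "nm ->", band(nm))
```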

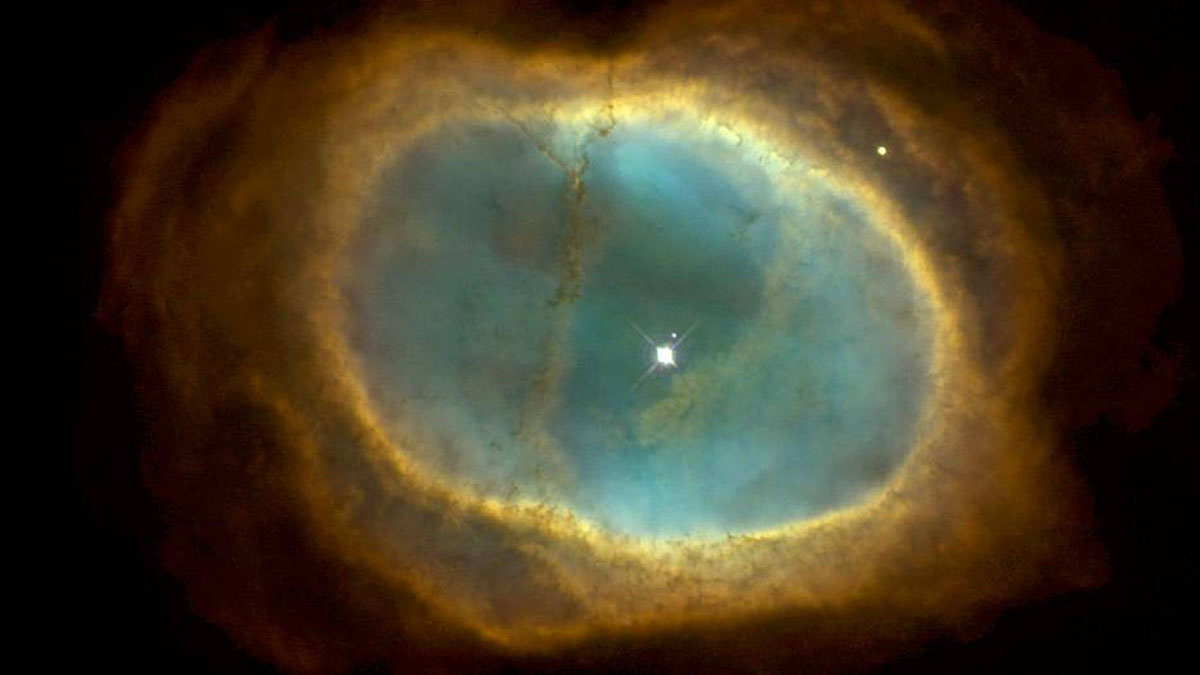

By the time Hubble images reach publications such as Space.com, they have been processed, with colors added to them. The colorization of Hubble’s “raw” grayscale images isn’t for purely aesthetic reasons, however. Nor is it done arbitrarily.

Some images show “true” colors, while others feature hues that are assigned to represent wavelengths of light that human eyes can’t see. These color composite images are generally created by combining exposures captured by Hubble using different filters.

Each filter is assigned a color that corresponds to the range of wavelengths it lets through: the image taken with the longest-wavelength filter is shown in red, the middle wavelengths in green and the shortest wavelengths in blue.
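The filter-to-color mapping can be sketched in a few lines of Python. This is a simplified illustration, not NASA’s actual processing: it assumes three already-aligned grayscale exposures keyed by a hypothetical filter wavelength in nanometers, and it uses a plain linear rescaling of each exposure to the 0 to 1 range, whereas real image processors typically apply more careful brightness stretches.

```python
def chromatic_composite(exposures):
    """Combine three grayscale exposures into one RGB image.

    exposures maps each filter's wavelength (nm) to a 2D list of pixel values.
    Longest wavelength -> red, middle -> green, shortest -> blue.
    """
    if len(exposures) != 3:
        raise ValueError("expected exactly three filtered exposures")
    red, green, blue = (exposures[w] for w in sorted(exposures, reverse=True))

    def normalize(image):
        # Rescale each exposure to 0..1 so one filter doesn't dominate the colors.
        flat = [v for row in image for v in row]
        lo, hi = min(flat), max(flat)
        span = (hi - lo) or 1.0   # avoid dividing by zero for a flat image
        return [[(v - lo) / span for v in row] for row in image]

    r, g, b = normalize(red), normalize(green), normalize(blue)
    return [[(r[y][x], g[y][x], b[y][x]) for x in range(len(r[0]))]
            for y in range(len(r))]


# Hypothetical 1x2-pixel exposures through filters near 800, 550 and 450 nm.
rgb = chromatic_composite({800: [[0, 10]], 550: [[5, 5]], 450: [[2, 4]]})
print(rgb)  # [[(0.0, 0.0, 0.0), (1.0, 0.0, 1.0)]]
```

The right-hand pixel is bright in the longest- and shortest-wavelength exposures, so it comes out magenta, which is exactly the kind of wavelength information a grayscale frame alone can’t show.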

In some cases, colors might be added to Hubble photos to represent specific chemical elements present in or around the imaged object. Such color processing can reveal a wealth of scientific information that isn’t present in grayscale raw images from Hubble.

Images are also processed to remove imperfections and effects that don’t come from the objects being observed by Hubble. These undesired features could be the result of aging sensors causing “dead pixels” in images or the dynamic environment of space. For instance, Hubble images can be streaked by lines of bright light caused by passing asteroids, satellites or even flashing cosmic rays. The Hubble team often removes such distractions before releasing an image to the public.
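One widely used way to suppress such transient artifacts is to take several aligned exposures of the same field and keep the median value at each pixel, because a cosmic ray or satellite trail usually hits a given pixel in only one exposure. The Python sketch below illustrates that general technique under the assumption that the exposures are already aligned; it is not a description of the Hubble team’s actual pipeline.

```python
from statistics import median


def median_combine(exposures):
    """Per-pixel median of several aligned exposures (2D lists) of one field.

    A transient hit that appears in only one exposure is outvoted by the
    others, while the steady signal from the target survives.
    """
    rows, cols = len(exposures[0]), len(exposures[0][0])
    return [[median(img[y][x] for img in exposures) for x in range(cols)]
            for y in range(rows)]


e1 = [[10, 12], [11, 13]]
e2 = [[10, 900], [11, 13]]   # a simulated cosmic-ray hit on one pixel
e3 = [[11, 12], [10, 13]]
print(median_combine([e1, e2, e3]))  # [[10, 12], [11, 13]]
```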

In addition, larger mosaics made of many Hubble images stitched together must have gaps removed to create a single unified image. Hubble image processors also have to decide how to orient images, as there is no actual “up” or “down” in space.
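Stitching a mosaic can be illustrated with a toy Python sketch. It assumes each tile’s pixel offset on the final canvas is already known (real mosaics require careful alignment and distortion correction first), averages pixels where tiles overlap, and marks uncovered pixels as None so the remaining gaps are easy to find. The function and its inputs are hypothetical.

```python
def build_mosaic(tiles, height, width):
    """Paste tiles onto one canvas, averaging pixels where tiles overlap.

    tiles is a list of (row_offset, col_offset, image) triples, where image is
    a 2D list that is assumed to fit inside the height x width canvas.
    Pixels that no tile covers are returned as None (gaps still to fill).
    """
    sums = [[0.0] * width for _ in range(height)]
    counts = [[0] * width for _ in range(height)]
    for dy, dx, image in tiles:
        for y, row in enumerate(image):
            for x, value in enumerate(row):
                sums[dy + y][dx + x] += value
                counts[dy + y][dx + x] += 1
    return [[sums[y][x] / counts[y][x] if counts[y][x] else None
             for x in range(width)] for y in range(height)]


# Two hypothetical 2x2 tiles overlapping by one column on a 2x4 canvas.
mosaic = build_mosaic([(0, 0, [[1, 1], [1, 1]]), (0, 1, [[3, 3], [3, 3]])], 2, 4)
print(mosaic)  # [[1.0, 2.0, 3.0, None], [1.0, 2.0, 3.0, None]]
```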

The processing of Hubble images is an intricate and time-consuming procedure. Even simple Hubble photos can take days to process, while large, complex mosaics of multiple images can take months, NASA says.

Additional information

If you want to see what Hubble images look like before they undergo processing, a database of “raw images” is available at the Hubble Legacy Archive.

If you’d like to learn more about the electromagnetic spectrum, NASA’s Imagine the Universe page has a great concise explanation.

Bibliography

How Hubble Images Are Made, NASA’s Goddard Space Flight Center, https://www.youtube.com/watch?v=QGf0yzdM5OA

Hubble Space Telescope, NASA. Accessed 03/24/23 from https://www.nasa.gov/mission_pages/hubble/spacecraft/index.html

Hubble Space Telescope Observatory: Instruments, NASA. Accessed 03/24/23 from https://www.nasa.gov/content/goddard/hubble-space-telescope-science-instruments

Hubble’s Science Instruments, NASA Hubblesite. Accessed 03/24/23 from https://hubblesite.org/mission-and-telescope/instruments

Hubble Space Telescope Observatory: Hubble vs. Webb, NASA. Accessed 03/24/23 from https://www.nasa.gov/content/goddard/hubble-vs-webb-on-the-shoulders-of-a-giant
