
What just happened? Researchers have found that popular image generation models can be prompted to produce recognizable images of real people, potentially endangering their privacy. Some prompts cause the AI to copy an existing picture rather than create something new. These reproduced pictures might contain copyrighted material. Worse still, contemporary generative AI models can memorize and reproduce private data that was scraped into their training sets.

The researchers extracted more than a thousand training examples from the models, ranging from photographs of individual people to film stills, copyrighted news images, and trademarked company logos, and found that the AI reproduced many of them almost identically. The study was conducted by researchers from universities such as Princeton and Berkeley, as well as from the tech sector, specifically Google and DeepMind.

The same team worked on an earlier study that identified a similar problem in AI language models, particularly GPT-2, a forerunner of OpenAI's wildly successful ChatGPT. Reuniting the band, the team, led by Google Brain researcher Nicholas Carlini, obtained its results by feeding image captions, such as a person's name, to Google's Imagen and Stable Diffusion. They then checked whether any of the generated images matched the originals in the models' training data.

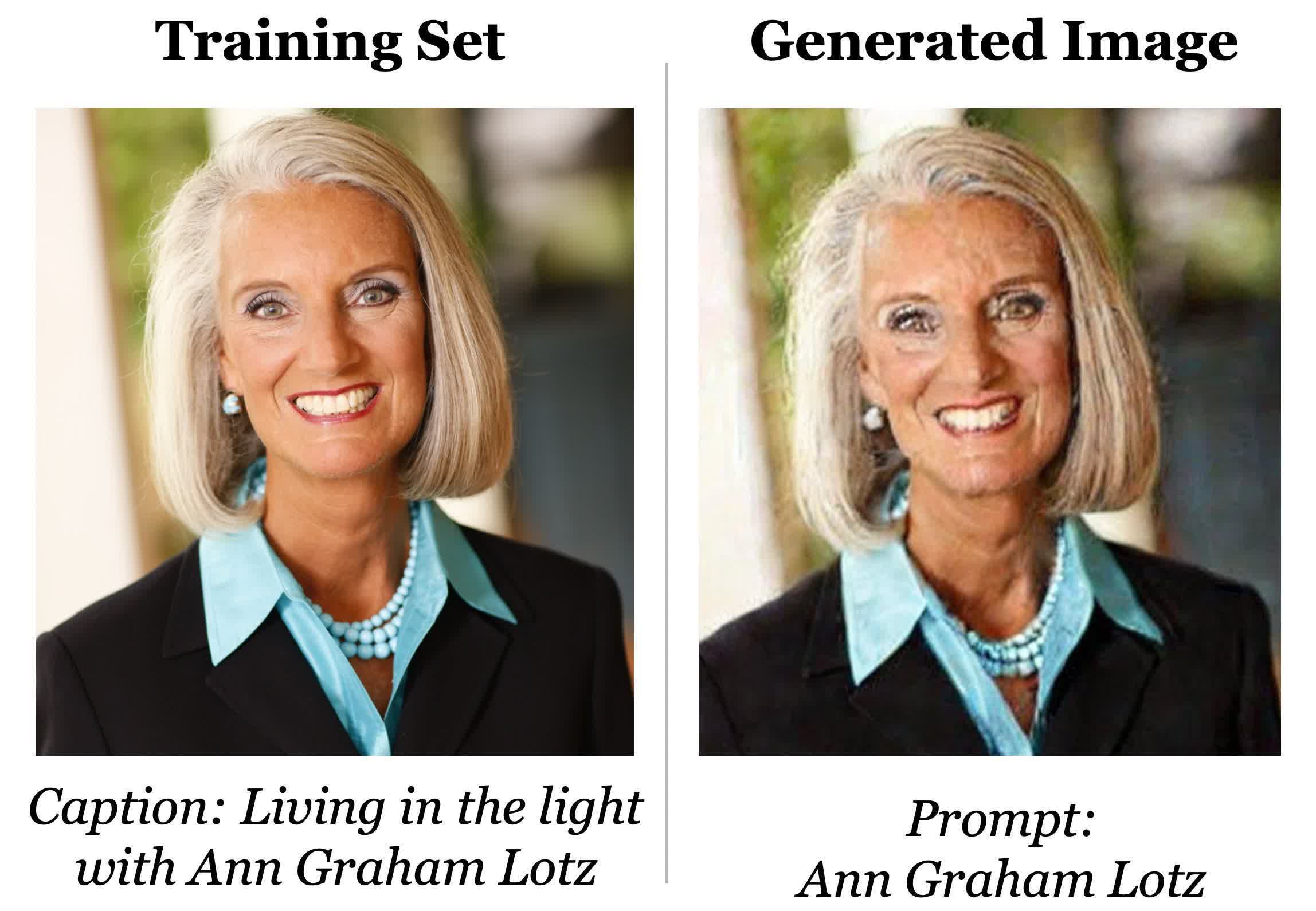

The image shown here was generated from a caption taken from LAION, the multi-terabyte collection of scraped images used to train Stable Diffusion. When the researchers entered that caption as a Stable Diffusion prompt, the model produced the same image, only slightly warped by digital noise. To confirm such cases, the team ran the same prompt repeatedly and then manually checked whether the resulting image was part of the training set.

The researchers noted that a non-memorized output can still faithfully depict the text the model was prompted with, but it would not share the same pixel makeup and would differ from every training image.
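To make the idea of matching "pixel makeup" concrete, here is a minimal Python sketch of checking repeated generations for one prompt against a training set. It assumes tiny images stored as flat lists of pixel intensities between 0 and 1, and it uses a plain root-mean-square pixel distance with an arbitrary 0.05 threshold. The function names and the threshold are hypothetical, and the study's own similarity measure is more elaborate.

```python
import math

# Hypothetical toy representation: an image is a flat list of pixel values in [0, 1].
Image = list[float]


def pixel_distance(a: Image, b: Image) -> float:
    """Root-mean-square difference between two equally sized images."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def find_memorized(generations: list[Image], training_set: list[Image],
                   threshold: float = 0.05) -> list[tuple[int, int]]:
    """Return (generation index, training index) pairs that are near-identical.

    The threshold is an illustrative assumption, not a value from the study.
    """
    matches = []
    for g_idx, gen in enumerate(generations):
        for t_idx, train in enumerate(training_set):
            if pixel_distance(gen, train) <= threshold:
                matches.append((g_idx, t_idx))
    return matches


# Two training images and two outputs from running the same prompt twice.
training_set = [[0.1, 0.9, 0.5, 0.3], [0.8, 0.2, 0.4, 0.6]]
generations = [
    [0.12, 0.88, 0.51, 0.29],  # noisy copy of training image 0: memorized
    [0.5, 0.5, 0.5, 0.5],      # plausible but novel output: not memorized
]
print(find_memorized(generations, training_set))  # → [(0, 0)]
```

A memorized output sits within a small distance of some training image, while a novel output that still fits the prompt stays far from all of them.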

Florian Tramèr, an assistant professor of computer science at ETH Zurich and one of the study's participants, pointed out significant limitations to the findings. The photos the researchers were able to extract either appeared many times in the training data or stood out sharply from the rest of the images in the data set. According to Tramèr, people with uncommon names or appearances are therefore more likely to be "memorized."

Diffusion models are the least private kind of image-generation model, according to the researchers: they leak more than twice as much training data as Generative Adversarial Networks (GANs), an earlier class of image model. The research aims to alert developers to the privacy risks of diffusion models. These include potential misuse, reproduction of copyrighted material and sensitive private data such as medical images, and vulnerability to outside attacks in which training data can be easily extracted. One fix the researchers suggest is to find duplicated images in the training set and remove them from the data collection.
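The deduplication fix can be sketched in a few lines of Python. This hypothetical `deduplicate` function keeps an image only if it is not near-identical to one already kept, assuming images stored as flat lists of pixel values, a root-mean-square pixel distance, and an illustrative 0.05 threshold. None of these choices come from the study.

```python
import math

# Hypothetical toy representation: an image is a flat list of pixel values in [0, 1].
Image = list[float]


def rms_distance(a: Image, b: Image) -> float:
    """Root-mean-square difference between two equally sized images."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def deduplicate(dataset: list[Image], threshold: float = 0.05) -> list[Image]:
    """Keep each image only if it is not near-identical to an image already kept.

    The threshold is an illustrative assumption, not a value from the study.
    """
    kept: list[Image] = []
    for image in dataset:
        if all(rms_distance(image, other) > threshold for other in kept):
            kept.append(image)
    return kept


dataset = [
    [0.1, 0.9, 0.5, 0.3],
    [0.1, 0.9, 0.5, 0.3],    # exact duplicate of the first image
    [0.11, 0.9, 0.49, 0.3],  # near duplicate of the first image
    [0.8, 0.2, 0.4, 0.6],    # distinct image
]
clean = deduplicate(dataset)
print(len(clean))  # → 2
```

Because every image is compared with every kept image, this approach is quadratic; a dataset on the scale of LAION would likely need something more scalable, such as comparing compact image embeddings with approximate nearest-neighbour search.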
