
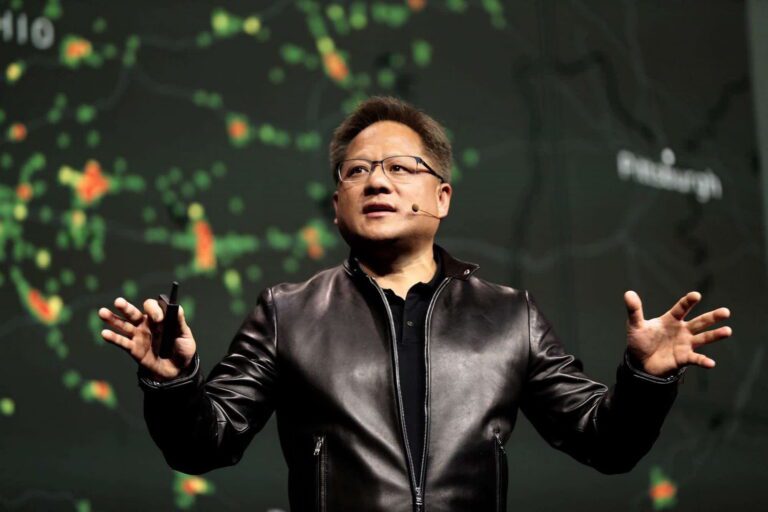

In context: The booming AI sector has propelled Nvidia’s financials to new heights, as its AI acceleration GPUs have become highly sought after. However, these general-purpose components are facing increasing competition, prompting the company to develop more efficient chips tailored for specific clients and tasks.

Sources have told Reuters that Nvidia is forming a new unit to design custom chips, targeting a market estimated at $30 billion. The unit’s products would span multiple sectors, with AI as the chief focus. The company has reportedly begun talks with Microsoft, Meta, Google, and OpenAI.

Aside from AI, the new unit might also design custom telecom, cloud, automotive, and gaming hardware. Gaming alone is a substantial market: much of rival AMD’s business comes from designing custom processors for Xbox and PlayStation consoles. Nvidia’s push into custom hardware is likely a response to intensifying competition in the generative AI arena, the same boom that has ballooned the company’s profits.

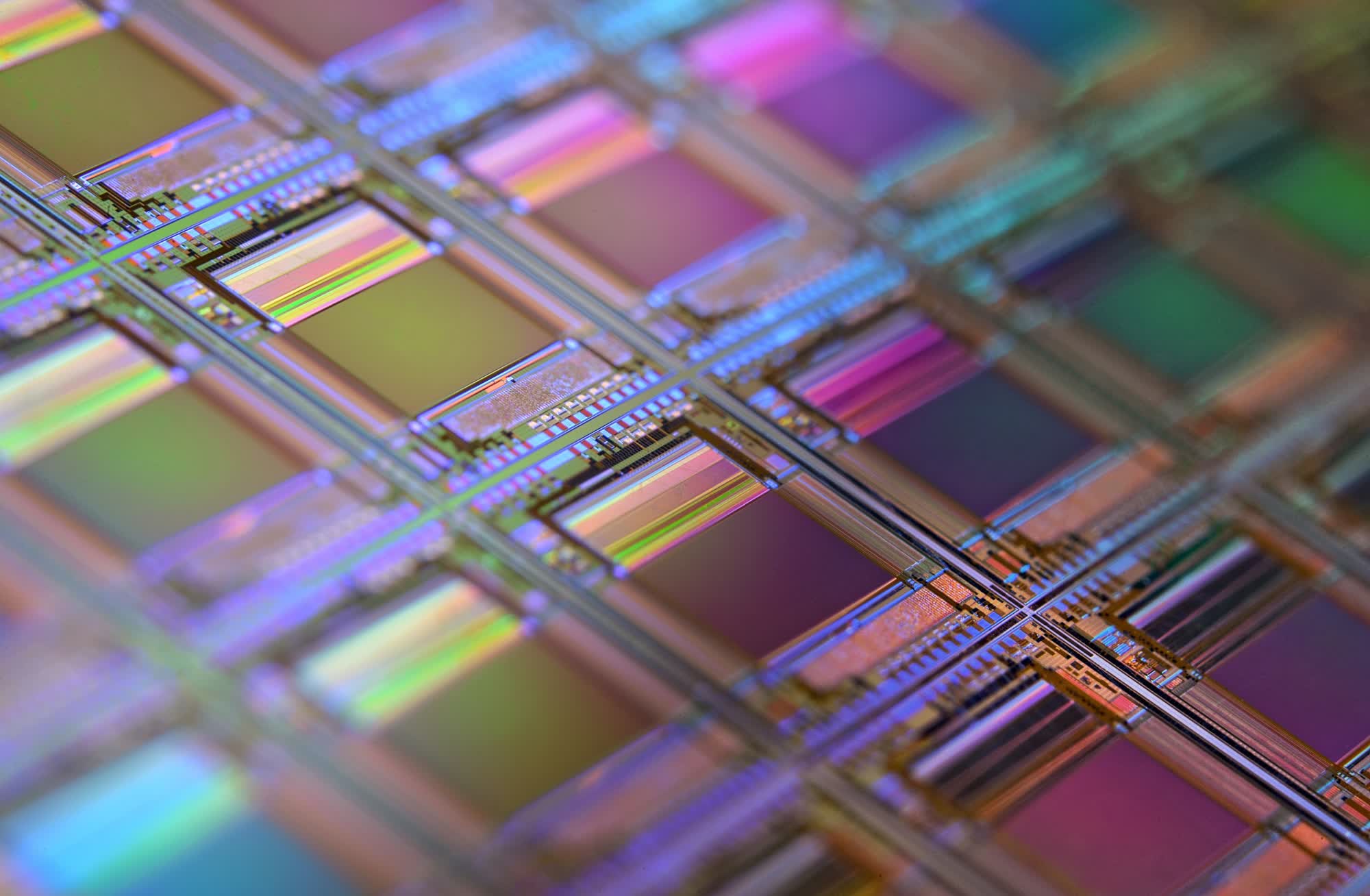

Since the beginning of the generative AI craze, Nvidia has dramatically boosted its stock price and market cap by selling H100 data center GPUs at a reported profit of up to 1,000 percent. The company doesn’t reveal its expenses, but estimates indicate that manufacturing an H100 costs a few thousand dollars, while the cards sell for between $25,000 and $60,000.

Despite the astronomical price tag, clients can’t get enough of them. Meta plans to buy hundreds of thousands of H100s before the end of the year. The demand has pushed Nvidia’s shares to over $720 this week, up 50 percent since the beginning of the year and over 200 percent compared to 2022. The company’s market cap of $1.77 trillion now sits just behind those of Amazon and Alphabet.

Nvidia’s H100, A100, and new H200 AI chips currently dominate the markets for general-purpose AI and high-performance computing workloads. However, competition is heating up from companies like AMD, which claims its MI300X outperforms the H100 at a fraction of the cost.

Furthermore, other companies are designing proprietary silicon, which affords greater control over costs and energy consumption. The energy footprint of generative AI is approaching that of small countries, so improving efficiency has become a primary area of research in the sector, and bespoke hardware might become essential to that effort. Late last year, Amazon revealed the Trainium2 and the Arm-based Graviton4, both of which promise significant efficiency gains.
